
Compact dual fish-eye cameras have existed for nearly ten years and offer an unobstructed 360-degree field of view. Their resolution keeps increasing and now reaches up to 70 million pixels, giving many kinds of robots an excellent view of their surroundings.

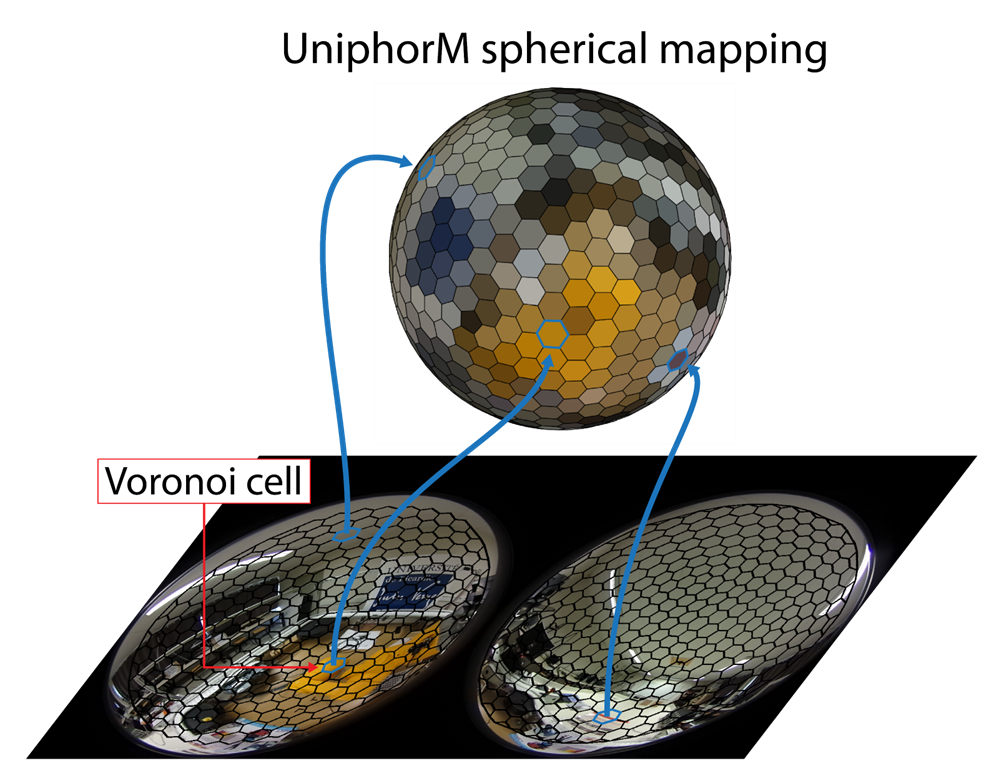

But because these 360-degree images must be projected onto a plane for processing, the projection introduces many distortions, much like flattening a globe into a world map. Handling these distortions can be computationally heavy for real-time applications.

The division of the sphere into cells, and their projections in the image.

© Antoine André

Researchers from the Joint Robotics Laboratory (JRL, AIST/CNRS) and the Modeling, Information & Systems laboratory (MIS, Université de Picardie Jules Verne) have proposed a new spherical representation, called Uniform spherical mapping of omnidirectional images (UniphorM). It transforms acquired images into a regular spherical mesh, a geometry that had so far received little attention in the state of the art.

They showed that even after drastically reducing the resolution, to fewer than 1,000 pixels, a robot can still estimate its orientation in space and recognize places as well as it can at much higher resolutions, with minimal algorithmic complexity and memory usage.

The scientists achieved this result by performing their computations directly on the sphere. The sphere is approximated by an icosahedron, a solid with twenty triangular faces, which is then subdivided, and each pixel is assigned to one of the vertices of the resulting mesh. The approach has been validated on several types of robots: two aerial drones (a hexacopter and a flying wing), a six-degree-of-freedom robotic arm, and mobile robots.
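The article does not give the exact construction, so the following is a minimal sketch assuming the standard "icosphere" scheme: start from the 20-face icosahedron, repeatedly split each triangle into four, and push the new vertices back onto the unit sphere. After n subdivisions the mesh has 10·4^n + 2 vertices, so three subdivisions give 642 vertices, one level that stays under the 1,000-pixel mark. The sampling step further assumes (hypothetically) that the dual-fisheye image has already been stitched into an equirectangular panorama and uses nearest-neighbour lookup. The names `icosphere` and `sample_equirect` are illustrative, not the researchers' code.

```python
import math


def _normalize(v):
    """Project a 3D vector onto the unit sphere."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def icosahedron():
    """12 unit-vector vertices and 20 triangular faces of a regular icosahedron."""
    t = (1.0 + math.sqrt(5.0)) / 2.0  # golden ratio
    raw = [(-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
           (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
           (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)]
    faces = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
             (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
             (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
             (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]
    return [_normalize(v) for v in raw], faces


def subdivide(verts, faces):
    """Split every triangle into four, pushing each new edge midpoint onto the sphere."""
    verts = list(verts)
    cache = {}  # edge (i, j) -> index of its midpoint, so shared edges reuse one vertex

    def midpoint(i, j):
        key = (min(i, j), max(i, j))
        if key not in cache:
            a, b = verts[i], verts[j]
            verts.append(_normalize((a[0] + b[0], a[1] + b[1], a[2] + b[2])))
            cache[key] = len(verts) - 1
        return cache[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return verts, new_faces


def icosphere(level):
    """Icosahedron subdivided `level` times: 10 * 4**level + 2 vertices."""
    verts, faces = icosahedron()
    for _ in range(level):
        verts, faces = subdivide(verts, faces)
    return verts, faces


def sample_equirect(image, verts):
    """Nearest-neighbour lookup of one grey value per vertex in an
    equirectangular image given as a list of rows (z axis pointing up)."""
    h, w = len(image), len(image[0])
    values = []
    for x, y, z in verts:
        lon = math.atan2(y, x)                   # longitude in [-pi, pi]
        lat = math.asin(max(-1.0, min(1.0, z)))  # latitude in [-pi/2, pi/2]
        col = min(int((lon + math.pi) / (2 * math.pi) * w), w - 1)
        row = min(int((math.pi / 2 - lat) / math.pi * h), h - 1)
        values.append(image[row][col])
    return values


if __name__ == "__main__":
    for level in range(5):
        print(level, len(icosphere(level)[0]))  # 12, 42, 162, 642, 2562 vertices
```

Because every vertex is (nearly) equidistant from its neighbours, each "pixel" covers a similar patch of the sphere, unlike an equirectangular image, where rows near the poles are heavily oversampled.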

All of these machines carried out two important robotics tasks: estimating their orientation in space and recognizing places. The first involves estimating the rotation that realigns two spherical images so that they coincide, which was achieved here with a 95% success rate. Place recognition involves recognizing images of a route captured under different lighting, times of day, or weather conditions, a task in which the new algorithm proved 40% more effective than the state of the art. The low resolution is also compatible with privacy protection, as it is too coarse to allow facial recognition.
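The article does not describe how places are matched. As a generic illustration only, and not the UniphorM method, one simple baseline treats the vertex intensities of each low-resolution spherical image as a descriptor and picks the reference image with the highest zero-mean normalized cross-correlation (ZNCC), a score that ignores global changes in brightness and contrast. The names `zncc` and `recognize_place` are hypothetical, and the sketch assumes the query and reference images are already rotationally aligned, for example by a prior orientation-estimation step.

```python
import math


def zncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length intensity
    vectors: 1.0 means identical up to a gain and an offset."""
    if len(a) != len(b) or not a:
        raise ValueError("descriptors must be non-empty and of equal length")
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0  # a flat image carries no information


def recognize_place(query, database):
    """Return (index, score) of the reference descriptor most similar to `query`."""
    best_index, best_score = -1, -math.inf
    for i, ref in enumerate(database):
        score = zncc(query, ref)
        if score > best_score:
            best_index, best_score = i, score
    return best_index, best_score


if __name__ == "__main__":
    # Toy 4-vertex descriptors; the query is reference 0 seen under brighter,
    # higher-contrast lighting (2 * x + 5).
    database = [[10, 20, 30, 40], [40, 30, 20, 10], [5, 5, 50, 50]]
    query = [25, 45, 65, 85]
    print(recognize_place(query, database))  # index 0, score close to 1.0
```

With only a few hundred values per image, comparing a query against thousands of stored places remains cheap, which illustrates why a low-resolution representation suits real-time use.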

The researchers are now focusing on another important task in robotics: visual servoing. This involves capturing an image at a desired pose and then controlling the robot's motion so that, starting from a different pose, it returns to the desired one.
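For reference, the classic formulation from the visual-servoing literature (not necessarily the one these researchers will adopt) defines an error between the current visual features $\mathbf{s}(t)$ and those of the desired image $\mathbf{s}^*$, then computes a camera velocity that makes this error decrease exponentially:

$$
\mathbf{e}(t) = \mathbf{s}(t) - \mathbf{s}^*, \qquad
\mathbf{v}_c = -\lambda \, \widehat{\mathbf{L}}_{\mathbf{s}}^{+} \, \mathbf{e}(t)
$$

Here $\mathbf{v}_c$ is the six-degree-of-freedom camera velocity, $\lambda > 0$ is a gain, and $\widehat{\mathbf{L}}_{\mathbf{s}}^{+}$ is the pseudo-inverse of an estimate of the interaction matrix that links feature motion to camera motion, $\dot{\mathbf{s}} = \mathbf{L}_{\mathbf{s}} \mathbf{v}_c$. In photometric (direct) visual servoing, the features $\mathbf{s}$ can be the image intensities themselves, a choice that would fit naturally with a compact spherical image of a few hundred pixels.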