Artificial intelligence: a technological bubble ready to burst? 🫧

Published by Adrien,

Source: The Conversation under Creative Commons license

Caught between optimism, overexposure, growing awareness of their limitations, and disillusionment, artificial intelligence systems still have only a limited impact.

Artificial intelligence (AI) is often presented as the next revolution that will transform our lives. Since the launch of ChatGPT in 2022, generative AI has sparked global enthusiasm. In 2023, Nvidia, a key manufacturer of the chips used to train AI models, surpassed a market valuation of $1 trillion. The following year, it exceeded $3 trillion.

However, this enthusiasm is accompanied by doubts. Indeed, while AI is in the media spotlight, its concrete economic impact remains modest, and its adoption by businesses is limited. A recent study estimates that only 5% of companies actively use AI technologies in their processes, whether generative AI, predictive analytics, or automation systems. In some cases, AI is even accused of diverting leaders' attention from more pressing operational issues.

This gap between expectations and concrete results raises a question: is AI simply going through a "hype cycle", in which excessive enthusiasm is quickly followed by disillusionment, as has been observed with other technologies since the 1990s? Or are we witnessing a real decline in interest in this technology?

From the origins of AI to ChatGPT: waves of optimism and questions

The history of AI is marked by cycles of optimism and skepticism. As early as the 1950s, researchers envisioned a future filled with machines capable of thinking and solving problems as effectively as humans. This enthusiasm led to ambitious promises, such as creating systems capable of automatically translating any language or perfectly understanding human language.

However, these expectations proved unrealistic given the technological limitations of the time. Thus, the first disappointments led to the "AI winters" in the late 1970s and late 1980s, periods when funding dropped due to the inability of technologies to meet the promises made.

The 1990s, however, marked a significant turning point thanks to three key elements: the explosion of big data, increased computing power, and the emergence of more efficient algorithms. The internet facilitated the massive collection of data, which is essential for training machine learning models. These vast datasets are crucial because they provide the examples AI needs to "learn" and perform complex tasks. At the same time, advances in processors made it possible to run advanced algorithms, such as the deep neural networks that underpin deep learning. Together, these advances enabled the development of AI systems capable of performing tasks previously out of reach, such as image recognition and automatic text generation.

These enhanced capabilities revived hopes for the revolution anticipated by the pioneers of the field, with AI becoming ubiquitous and effective for a multitude of tasks. However, they also come with major challenges and risks that are beginning to temper the enthusiasm surrounding AI.

A gradual realization of the technical limitations weighing on the future of AI

Recently, stakeholders attentive to AI development have become aware of the limitations of current systems, which could hinder their adoption and limit expected results.

First, deep learning models are often described as "black boxes" due to their complexity, making their decisions difficult to explain. This opacity can reduce user trust, limiting adoption due to fears of ethical and legal risks.

Algorithmic biases are another major issue. Current AI systems rely on vast amounts of data that are rarely free from bias. AI thus reproduces these biases in its results, as was the case with Amazon's recruitment algorithm, which systematically discriminated against women. Several companies have had to backtrack after biases were detected in their systems. For example, Microsoft withdrew its chatbot Tay after it generated hateful remarks, while Google suspended its facial recognition tool, which was less effective for people of color.

These risks make some companies reluctant to adopt these systems, fearing damage to their reputation.

The environmental footprint of AI is also concerning. Advanced models require significant computing power and consume large amounts of energy. For example, training a large model like GPT-3 is estimated to emit as much CO₂ as five round trips between New York and San Francisco (approximately 2,500 miles each way). In the context of combating climate change, this raises questions about whether large-scale deployment of these technologies is appropriate.

Overall, these limitations explain why some initial expectations, such as the promise of widespread and reliable automation, have not been fully realized and face concrete challenges that could dampen enthusiasm for AI.

Towards a measured and regulated adoption of AI?

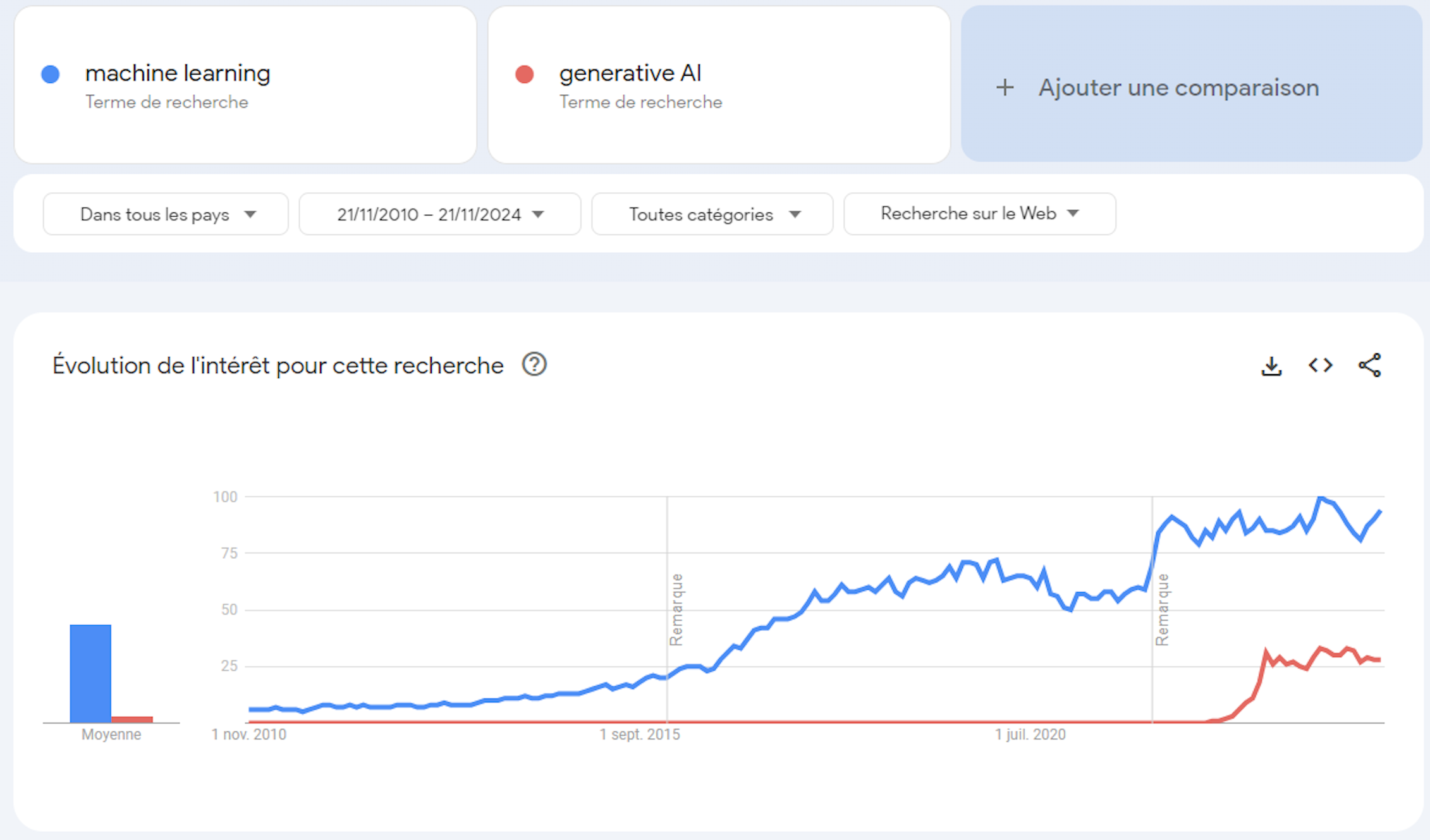

AI, already well integrated into our daily lives, seems too entrenched to disappear, making an "AI winter" like those of the 1970s and 1980s unlikely. Rather than a lasting decline of this technology, some observers point to the emergence of a bubble. The hype, amplified by the repeated use of the term "revolution," has fueled often disproportionate excitement and helped inflate that bubble. Ten years ago, the buzzword was machine learning; today, it is generative AI. Different concepts have been popularized in turn, each promising a new technological revolution.

Google Trends.

Yet modern AI is far from a "revolution": it builds on a long line of earlier research, which has made it possible to develop more sophisticated, efficient, and useful models.

However, this sophistication comes at a practical cost that is rarely mentioned in the flashy announcements. The complexity of AI models partly explains why so many companies find AI adoption difficult. Often large and hard to master, these models require dedicated infrastructure and rare expertise, both of which are expensive. Deploying AI systems can therefore cost more than it brings in, in both financial and energy terms. For example, it is estimated that a system like ChatGPT costs up to $700,000 per day to operate, due to the immense computing and energy resources required.

Added to this is the question of regulation. Principles such as the minimization of personal data collection, required by the GDPR, run counter to the way current AI systems are built, which depends on vast amounts of data. The AI Act, in force since August 2024, could also challenge the development of these sophisticated systems. It has been shown that AI systems such as OpenAI's GPT-4 or Google's PaLM 2 do not meet 12 key requirements of this act. This non-compliance could call into question current AI development methods and affect their deployment.

All these factors could lead to the bursting of this AI bubble, prompting us to reconsider the exaggerated picture of its potential presented in the media. It is therefore necessary to adopt a more nuanced approach, redirecting the discourse towards more realistic and concrete perspectives that recognize the limitations of these technologies.

This awareness must also guide us towards a more measured development of AI, with systems better suited to our needs and less risky for society.