Two types of artificial neurons to emulate the brain 💡

Published by Adrien,

Source: The Conversation under Creative Commons license

Other Languages: FR, DE, ES, PT

The brain remains the king of computers. The most sophisticated machines inspired by it, known as "neuromorphic," now comprise up to 100 million neurons, as many as the brain of a small mammal.

These networks of artificial neurons and synapses form the basis of artificial intelligence. They can be emulated in two ways: either through computer simulations or using electronic components reproducing biological neurons and synapses, assembled in "neuroprocessors."

These software and hardware approaches are now compatible, foreshadowing major developments in the field of AI.

How does our brain work? Neurons, synapses, networks

The cortex forms the outer layer of the brain. A few millimeters thick and about the size of a dinner napkin, it contains over 10 billion neurons that process information in the form of electrical impulses called "action potentials" or "spikes."

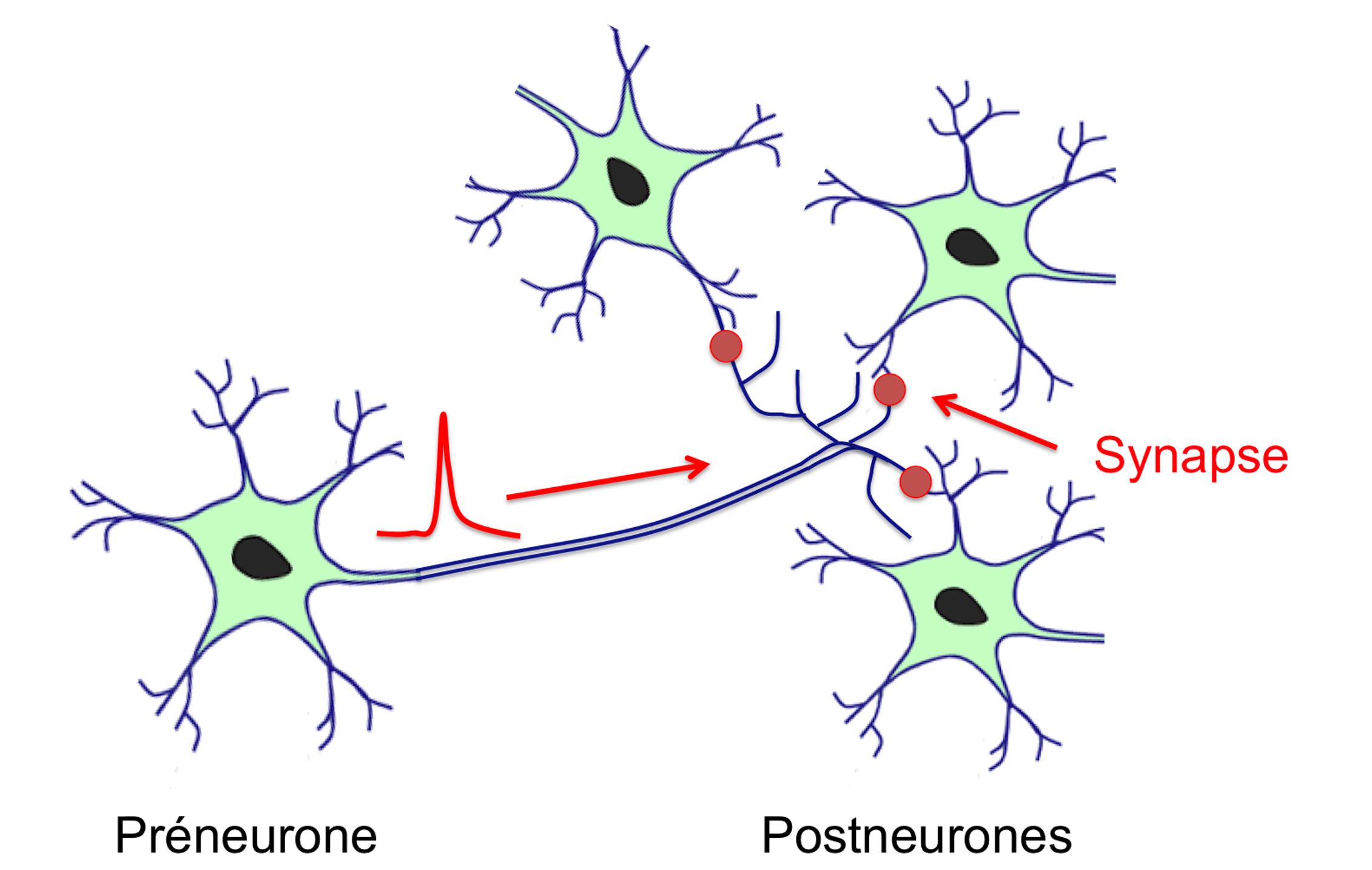

The connection point between a neuron emitting a spike (the presynaptic neuron) and the receiving neuron (the postsynaptic neuron) is the synapse. Each neuron is connected to about 10,000 other neurons via synapses, so the connectivity of such a network, known as the connectome, is astonishingly dense.
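As a rough order-of-magnitude check using only the two figures above (and counting each synapse once), the cortex alone would hold on the order of a hundred trillion synapses:

```latex
N_{\text{syn}} \approx \underbrace{10^{10}}_{\text{neurons}} \times \underbrace{10^{4}}_{\text{synapses per neuron}} = 10^{14}
```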

The function of neurons is fixed: they sum the signals from their synapses, and if that sum reaches a certain threshold, they generate an action potential, or spike, that propagates along the axon. Remarkably, part of this processing is analog (the summation of synaptic signals is continuous), while the other part is binary (the neuron either generates a spike or does nothing).

Thus, the neuron can be thought of as an analog computer coupled to a digital communication system. Unlike neurons, synapses are plastic: they can adjust the intensity of the signals transmitted to the postsynaptic neuron, and they have a "memory" effect, since a synapse's state can persist over time.
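The analog-sum-then-binary-spike behavior described above can be illustrated with a minimal sketch of a non-leaky "integrate-and-fire" neuron. This is a common textbook simplification, not a model taken from the article: the function name, the threshold of 1.0, and the reset-to-zero after each spike are all illustrative assumptions, and biologically realistic models also include a leak and a refractory period.

```python
def integrate_and_fire(spike_trains, weights, threshold=1.0):
    """Non-leaky integrate-and-fire neuron (illustrative sketch).

    spike_trains: one list per time step, holding a 0/1 spike for each
                  presynaptic neuron.
    weights:      one synaptic weight per presynaptic neuron.
    Returns the output spike train as a list of 0/1 values.
    """
    potential = 0.0
    output = []
    for spikes_in in spike_trains:
        # Analog part: weighted synaptic signals are summed continuously.
        potential += sum(w * s for w, s in zip(weights, spikes_in))
        if potential >= threshold:
            # Digital part: emit a spike, then reset the membrane potential.
            output.append(1)
            potential = 0.0
        else:
            output.append(0)
    return output


# Two presynaptic neurons with (hypothetical) weights 0.5 and 0.3.
print(integrate_and_fire([[1, 0], [0, 1], [1, 1], [0, 0], [1, 1]], weights=[0.5, 0.3]))
# prints [0, 0, 1, 0, 0]
```

The neuron only fires at the third step, once the accumulated potential (0.5, then 0.8, then 1.6) crosses the threshold; the weights play the role of the plastic synapses.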

Network of biological neurons and propagation of action potentials or "spikes."

Alain Cappy, Author provided

Anatomically, the cortex is divided into about a million cortical columns, which are networks of neurons with the same interconnection architecture. Cortical columns can be considered elementary processors, with neurons as basic devices and synapses as the memory.

Functionally, cortical columns form a hierarchical network with connections going from the bottom (sensory receptors) to the top, enabling abstractions, but also from the top down, allowing predictions: the processors in our brain work both ways.

The main challenge for AI is to emulate the cortex's functionalities with artificial networks of neurons and synapses. This idea is not new, but progress has accelerated in recent years with deep learning.

Using software to simulate neural and synaptic networks

The software approach aims to simulate neural and synaptic networks with a standard computer. It involves three key elements: mathematical models of neurons and synapses, an interconnection architecture for neurons, and a learning rule that adjusts the "synaptic weights."

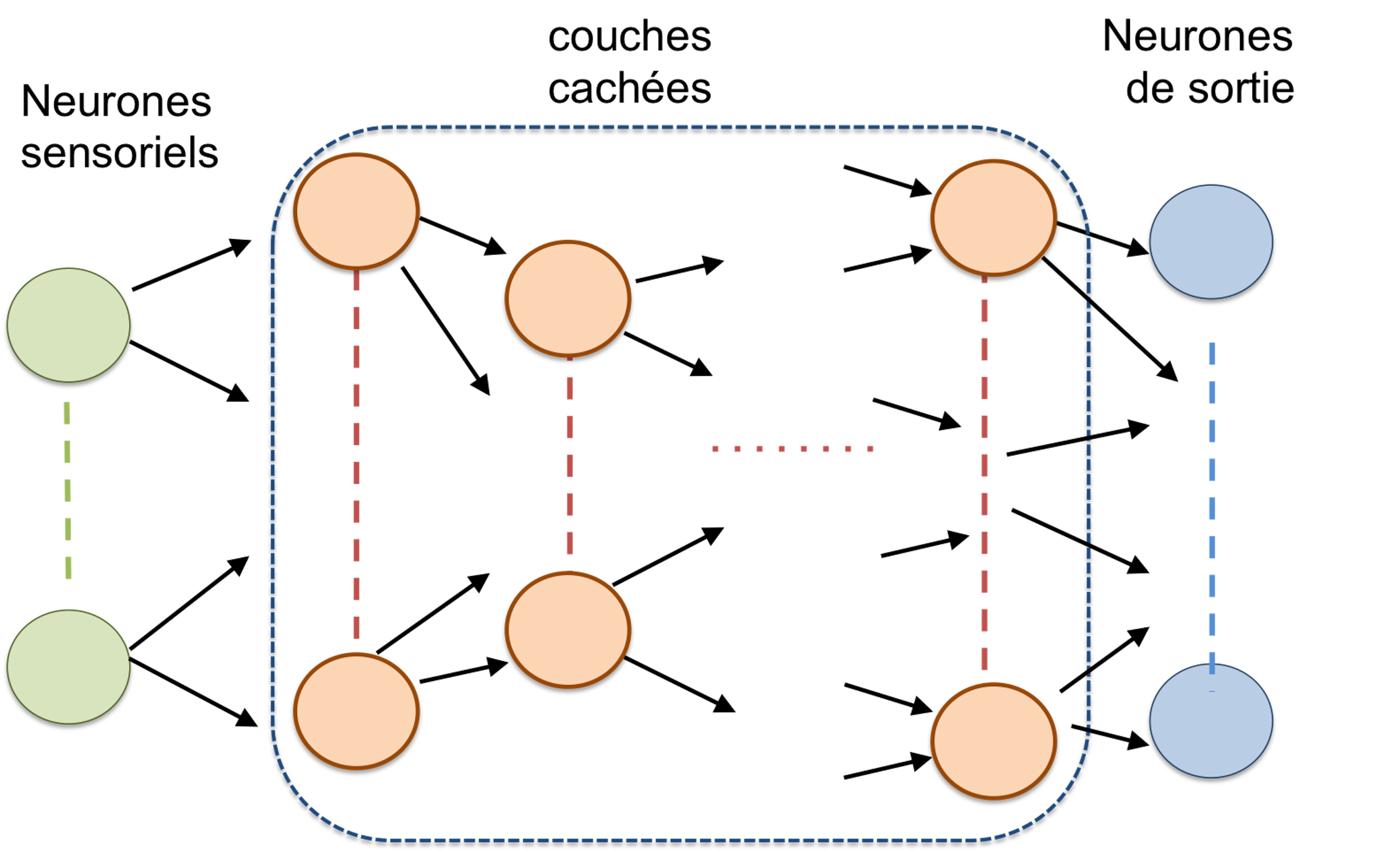

Architecture of a "feedforward" neural network. The parameters of such a network include the number of layers, the number of neurons per layer, and the interconnection rule from one layer to the next. For a given task, these parameters are generally chosen empirically and are often oversized. Note that the number of layers can be very high: more than 150 for Microsoft's ResNet, for example.

Alain Cappy, Author provided

Mathematical models of neurons range from the simplest to the most biologically realistic, but simplicity is essential when simulating large networks—several thousand or even millions of neurons—to limit computation time. The architecture of artificial neural and synaptic networks usually includes an "input layer" containing "sensory neurons" and an output layer providing the results. In between is an intermediate network that can have two main forms: "feedforward" or "recurrent."
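A feedforward architecture of this kind can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the helper names `make_network` and `forward` are hypothetical, the neurons are simple binary threshold units, the interconnection rule is "every neuron connects to every neuron of the next layer," and the weights are random rather than learned.

```python
import random


def make_network(layer_sizes, seed=0):
    """Random synaptic weights for a fully connected feedforward network.

    weights[k][j][i] is the synapse from neuron i of layer k to neuron j
    of layer k + 1. The uniform(-1, 1) initialisation is an arbitrary
    choice for illustration.
    """
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]


def forward(weights, inputs, threshold=0.0):
    """Propagate activity from the input layer to the output layer."""
    activity = inputs  # input layer ("sensory neurons")
    for layer in weights:  # one layer after another, no feedback
        activity = [
            1 if sum(w * a for w, a in zip(row, activity)) > threshold else 0
            for row in layer
        ]
    return activity  # output layer


net = make_network([4, 8, 8, 2])  # 4 inputs, two intermediate layers of 8, 2 outputs
print(forward(net, [1, 0, 1, 1]))
```

The `layer_sizes` list encodes the parameters mentioned in the feedforward caption (number of layers, neurons per layer); a deeper network is just a longer list.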

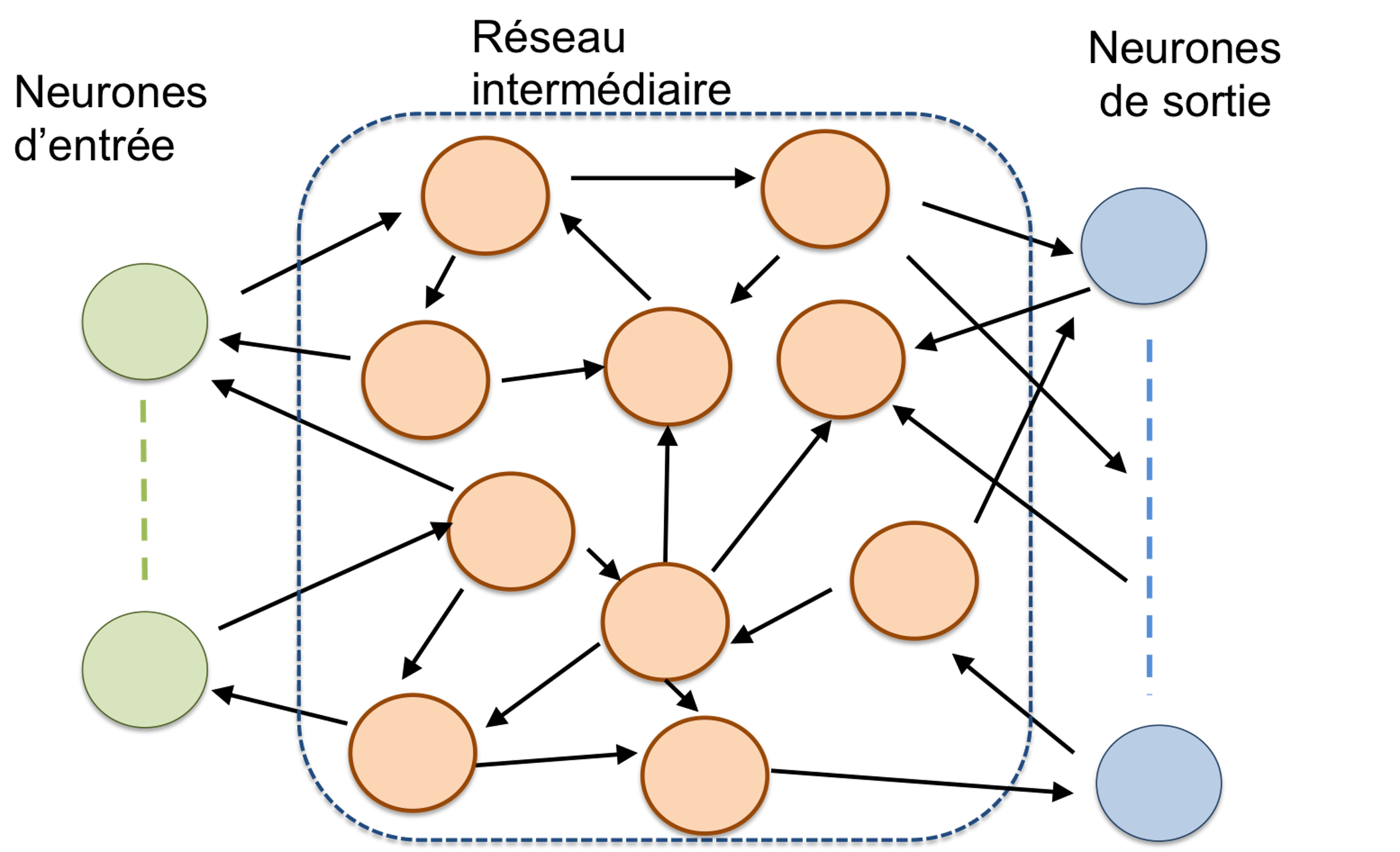

In a feedforward network, information is transferred from one "layer" to the next, without feedback loops to previous layers. By contrast, in recurrent networks, connections can exist from one layer N to previous ones, N-1, N-2, etc. Consequently, the state of a neuron at time t depends on both the input data at time t and the state of other neurons at time t-Δt, significantly complicating the learning process.
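The dependence of a recurrent network's state on its own past can be shown with a minimal sketch. Here one loop iteration stands for one time step Δt, and, as an assumption for simplicity, the neurons are continuous-valued `tanh` units (a common choice in software recurrent networks) rather than spiking neurons; `run_recurrent`, `w_in` and `w_rec` are hypothetical names.

```python
import math


def run_recurrent(inputs, w_in, w_rec):
    """Simple recurrent layer, one update per time step Δt.

    h_j(t) = tanh( sum_i w_in[j][i] * x_i(t) + sum_k w_rec[j][k] * h_k(t - Δt) )
    inputs: list of input vectors x(t), one per time step.
    Returns the list of states h(t).
    """
    state = [0.0] * len(w_rec)  # the network starts at rest
    history = []
    for x in inputs:
        state = [
            math.tanh(
                sum(w * xi for w, xi in zip(w_in[j], x))         # input at time t
                + sum(w * hk for w, hk in zip(w_rec[j], state))  # state at t - Δt
            )
            for j in range(len(w_rec))
        ]
        history.append(state)
    return history


# The same input presented twice yields two different states, because the
# second response also depends on the first one through the feedback weight.
print(run_recurrent([[1.0], [1.0]], w_in=[[1.0]], w_rec=[[0.5]]))
```

With the feedback weight set to zero the network behaves like a feedforward layer and both states are identical; it is precisely this memory of earlier states that makes training harder.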

Learning aims to determine the weight of each synapse, that is, how strongly the spike from a presynaptic neuron is transmitted to the postsynaptic neuron, so that the network can meet a predefined goal. There are two main types of learning: supervised, where a "teacher" knows the expected result for each input, and unsupervised, where no such teacher is present.

Recurrent neural networks contain feedback loops. In recurrent networks, the "time" variable is an essential parameter.

Alain Cappy, Author provided

In supervised learning, comparing the network's output for a given input with the result expected by the "teacher" allows the synaptic weights to be adjusted. In unsupervised learning, a rule such as the famous Hebb's rule, which strengthens a synapse when its presynaptic and postsynaptic neurons are active together, lets the synaptic weights evolve over repeated trials.
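The two kinds of learning can be contrasted in a minimal sketch. The article does not name a specific supervised rule; the one used here is the classic perceptron (delta) rule, chosen as a simple illustration, and the Hebbian update is its most basic form. Function names, learning rates and the logical-AND task are all illustrative assumptions.

```python
def delta_rule_update(weights, x, target, output, lr=0.1):
    """Supervised update (perceptron/delta rule): the teacher's target is
    compared with the network's output, and each weight moves in
    proportion to the error and to its input."""
    error = target - output
    return [w + lr * error * xi for w, xi in zip(weights, x)]


def hebb_update(weights, pre, post, lr=0.1):
    """Unsupervised Hebb rule: a synapse is strengthened when presynaptic
    and postsynaptic activity occur together. No target is needed."""
    return [w + lr * p * post for w, p in zip(weights, pre)]


def step(weights, x):
    """Threshold neuron: spike (1) if the weighted sum is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) > 0 else 0


# Supervised example: learn logical AND. The first input is a constant 1
# acting as a bias, so the firing threshold itself is learned.
data = [([1, 0, 0], 0), ([1, 0, 1], 0), ([1, 1, 0], 0), ([1, 1, 1], 1)]
w = [0.0, 0.0, 0.0]
for _ in range(20):  # repeated presentations ("trials") of the training set
    for x, target in data:
        w = delta_rule_update(w, x, target, step(w, x), lr=1.0)

print([step(w, x) for x, _ in data])  # prints [0, 0, 0, 1]
```

The supervised rule needs the target for every input, whereas `hebb_update` only looks at the activity on both sides of the synapse, which is why it can run without a teacher.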

Building hardware-based neural and synaptic networks

The hardware approach involves designing and fabricating neuroprocessors that emulate neurons, synapses, and interconnections. The most advanced technology relies on standard semiconductor processes (known as CMOS), used in our computers, tablets, and smartphones. It's the only sufficiently mature process to build circuits with several thousand or millions of neurons and synapses capable of performing the complex tasks required by AI. Still, technologies based on new devices are also emerging, such as spintronics or memristors.

Like biological networks, hardware-based artificial neural and synaptic networks often combine an analog part for the integration of synaptic signals and a digital part for communications and the storage of synaptic weights.

This mixed approach is used in the most advanced technologies, such as the chips in the European Human Brain Project, those from Intel, or IBM's TrueNorth. For example, the TrueNorth chip combines 1 million neurons and 256 million programmable synapses, distributed across 4,096 neuromorphic cores (comparable to biological cortical columns) interconnected by a communication network. The TrueNorth chip consumes 20 mW per cm², compared with the 50 to 100 W per cm² of a conventional microprocessor, a power gain of more than 1,000 times (it is standard to compare "power per unit area" because the chips have different sizes).
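The "more than 1,000 times" figure can be checked directly from the power densities quoted in the paragraph above. Note that this compares power per unit area, not energy per operation; the constant names below are illustrative.

```python
# Power densities quoted in the text (W/cm²).
TRUENORTH_W_PER_CM2 = 0.020           # 20 mW/cm²
CPU_W_PER_CM2_RANGE = (50.0, 100.0)   # conventional microprocessor

for cpu in CPU_W_PER_CM2_RANGE:
    ratio = cpu / TRUENORTH_W_PER_CM2
    print(f"{cpu:.0f} W/cm² vs 20 mW/cm²: factor of {ratio:,.0f}")
# prints:
# 50 W/cm² vs 20 mW/cm²: factor of 2,500
# 100 W/cm² vs 20 mW/cm²: factor of 5,000
```

Both ends of the quoted range give a factor in the thousands, so "over 1,000 times" is, if anything, conservative.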

Will the future be hardware or software?

Software-based artificial neural and synaptic networks elegantly solve many problems, especially in image and sound processing and, more recently, text generation. However, training recurrent artificial neural and synaptic networks remains a significant challenge, whether with supervised or unsupervised methods. Another issue is that the computational power required becomes enormous for the large networks needed to solve complex problems.

For example, the impressive results of the conversational program "GPT-3" rely on what was, at the time, the largest artificial neuron and synapse network ever built. It has 175 billion synapses and requires significant computational power: 295,000 processors that consume several megawatts of electrical power, equivalent to the consumption of a town of several thousand inhabitants. This is in stark contrast to the roughly 20 watts consumed by a human brain performing the same task!

The hardware approach and neuroprocessors are far more energy-efficient, but they face a major challenge: scaling up, that is, the production of millions or billions of neurons and synapses and their interconnection network.

Looking ahead, given that neuroprocessors use the same CMOS technology as conventional processors, the co-integration of software and hardware approaches could open the door to a new way of designing information processing, leading to efficient and energy-saving AI.