When psychologists decode the reasoning of artificial intelligences

Published by Adrien

Source: The Conversation under Creative Commons license

Are you familiar with large language models (LLMs)? Even if this term seems unfamiliar, you have likely heard of the most famous one: ChatGPT, developed by the California-based company OpenAI.

The deployment of such artificial intelligence (AI) models could have consequences that are difficult to foresee. Indeed, it is hard to predict precisely how LLMs, whose complexity rivals that of the human brain, will behave. Many of their abilities have been discovered through use rather than being anticipated at the time of their design.

To understand these "emergent behaviors," new investigations are needed. With this in mind, my research team applied tools from cognitive psychology, traditionally used to study human rationality, to analyze the reasoning of various LLMs, including ChatGPT.

Our work has highlighted the existence of reasoning errors in these artificial intelligences. Here are the details.

What are large language models?

Language models are AI models capable of understanding and generating human language. Simply put, these models can predict, based on context, the words most likely to appear in a sentence.
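To make the idea of "predicting the most likely next word" concrete, here is a minimal sketch in Python. It is a toy word-pair counter, not how an LLM actually works: the corpus, the function names `train_bigram_model` and `predict_next`, and the one-word context are all assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Tiny made-up corpus, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

A real LLM replaces these simple counts with a neural network and uses a much longer context than a single word, but the task is the same: score the possible continuations and favor the most probable one.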

LLMs are algorithms based on artificial neural networks, which are inspired by the functioning of biological neural networks in the human brain. Each node of such a network, an artificial neuron, typically receives multiple input values and, after processing them, generates an output value.
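As an illustration of a single artificial neuron, the sketch below combines several inputs into one output. The weighted sum followed by a sigmoid function is one common textbook choice, assumed here for simplicity; the weights and inputs are arbitrary values, not taken from any real model.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, then a sigmoid."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-weighted_sum))

# Arbitrary example values: 1.0 * 0.5 + 2.0 * (-0.25) + 0.0 = 0.0
print(neuron([1.0, 2.0], [0.5, -0.25], 0.0))  # prints 0.5
```

A network chains many such units together, and training consists of adjusting the weights and biases.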

LLMs differ from the "classic" artificial neural networks used in earlier language models in that they are based on specific architectures, are trained on vast datasets, and are generally massive in size (typically several billion parameters, the adjustable connection weights between "neurons").

Due to their size and structure (as well as the way they are trained), LLMs have demonstrated impressive performance in tasks specifically assigned to them, such as text creation, translation, and correction.

But that's not all: LLMs have also shown surprisingly good performance in a wide variety of other tasks, ranging from mathematics to basic forms of reasoning.

In other words, LLMs quickly exhibited capabilities that were not necessarily predictable from their programming. Moreover, they seem capable of learning to perform new tasks from very few examples.

These capabilities have created a unique situation in the field of AI: we now have systems so complex that we cannot predict the extent of their abilities in advance. In a way, we must "discover" their cognitive capacities experimentally.

Based on this observation, we hypothesized that tools developed in the field of psychology could be relevant for studying LLMs.

Why study reasoning in large language models?

One of the main goals of scientific psychology (experimental, behavioral, and cognitive) is to understand the mechanisms underlying the abilities and behaviors of an extremely complex set of neural networks: those of the human brain.

Our laboratory, which specializes in the study of cognitive biases in humans, first set out to determine whether LLMs also exhibit reasoning biases.

Given the role these machines could play in our lives, understanding how they reason and make decisions is crucial. Psychologists can also benefit from these studies: artificial neural networks, which can perform tasks at which the human brain excels (such as object recognition and speech processing), could also serve as cognitive models.

A growing body of evidence suggests that the neural networks implemented in LLMs provide accurate predictions of the neural activity involved in processes such as vision and language processing.

For example, it has been demonstrated that the neural activity of networks trained in object recognition significantly correlates with the neural activity recorded in the visual cortex of an individual performing the same task.

This also holds for predicting behavioral data, notably in learning.

Performance that has surpassed that of humans

In our studies, we primarily focused on LLMs from OpenAI (the company behind the GPT-3 language model, used in the early versions of ChatGPT), as these were the most advanced in the field at the time. We tested several versions of GPT-3, as well as ChatGPT and GPT-4.

To test these models, we developed an interface to automatically send questions and collect responses from the models, allowing us to gather a large amount of data.
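The article does not describe this interface, so the following is only a hypothetical sketch of what such a collection loop could look like. The names `run_battery` and `fake_model` are invented for illustration, and `fake_model` is a stand-in that returns canned text instead of calling any real model.

```python
def run_battery(ask_model, questions, repetitions=3):
    """Send each question `repetitions` times and collect the raw answers."""
    records = []
    for question in questions:
        for trial in range(repetitions):
            records.append({
                "question": question,
                "trial": trial,
                "answer": ask_model(question),
            })
    return records

def fake_model(prompt):
    # Hypothetical stand-in for a real model call; returns canned answers.
    return "10 cents" if "bat" in prompt else "I don't know"

questions = [
    "A bat and a ball cost $1.10 in total. The bat costs $1.00 more "
    "than the ball. How much does the ball cost?",
    "How many days are there in a week?",
]
records = run_battery(fake_model, questions)
print(len(records))  # prints 6 (2 questions x 3 repetitions)
```

Passing the model as a function keeps the loop independent of any particular provider; repeating each question matters because a model may not give the same answer every time.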

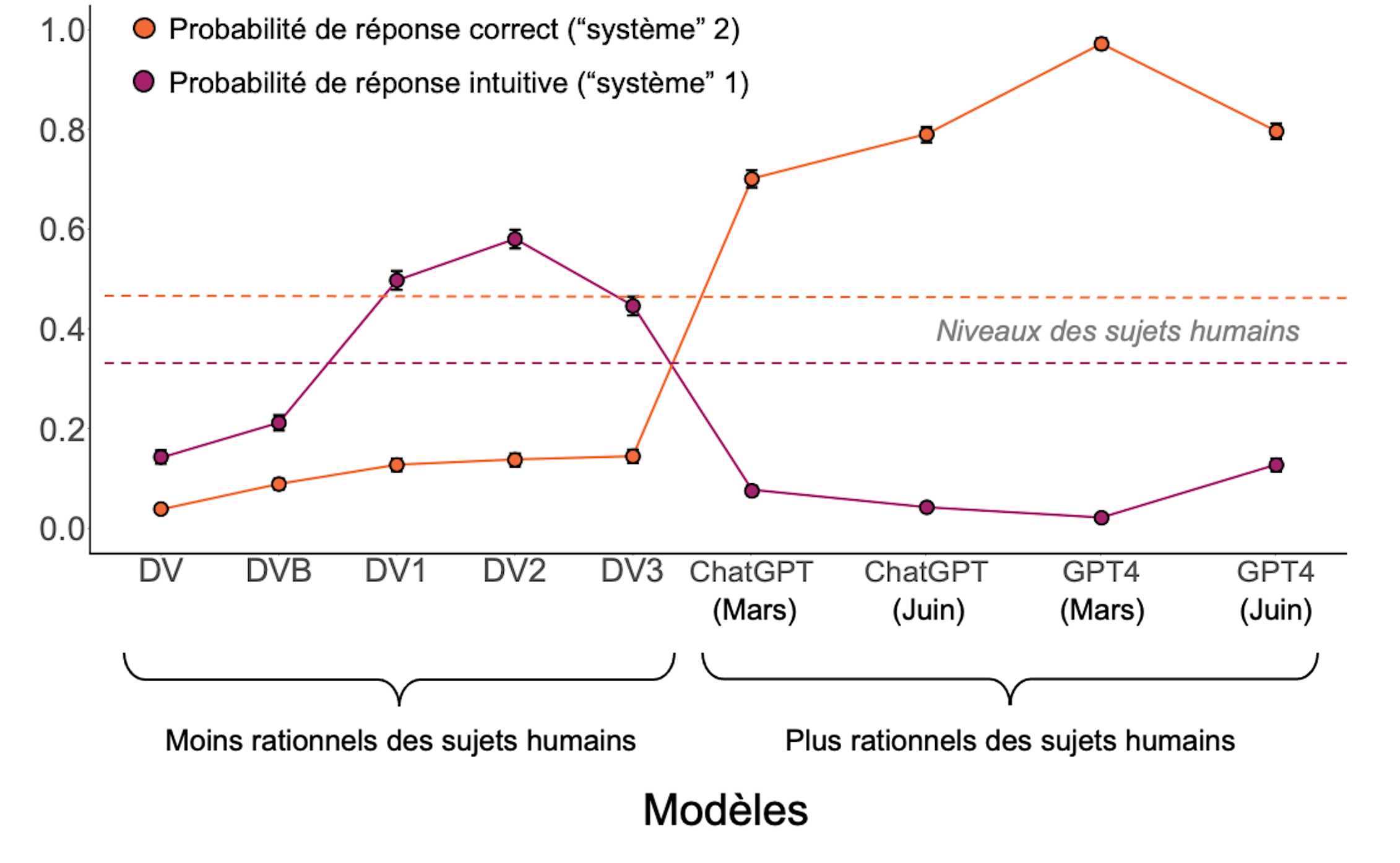

Analyzing this data revealed that the behavior of these LLMs could be classified into three categories.

The older models simply could not answer the questions sensibly.

Intermediate models answered the questions but often engaged in intuitive reasoning, leading them to make errors similar to those found in humans. They seemed to favor "System 1," as described by psychologist and Nobel laureate Daniel Kahneman in his theory of thinking modes.

In humans, System 1 is a fast, instinctive, and emotional mode of reasoning, while System 2 is slower, more reflective, and logical. Despite being more prone to reasoning biases, System 1 is favored because it is faster and less energy-consuming than System 2.

Here's an example of reasoning errors we tested, taken from the "Cognitive Reflection Test":

- Question: A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?

- Intuitive response ("System 1"): $0.10;

- Correct response ("System 2"): $0.05.
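The arithmetic behind this example can be checked in a few lines. The sketch below works in whole cents to avoid rounding issues; the names `satisfies_problem`, `TOTAL_CENTS`, and `DIFFERENCE_CENTS` are illustrative only.

```python
TOTAL_CENTS = 110       # bat + ball = $1.10
DIFFERENCE_CENTS = 100  # bat = ball + $1.00

def satisfies_problem(ball_cents):
    """Check whether a candidate ball price meets both conditions."""
    bat_cents = ball_cents + DIFFERENCE_CENTS
    return ball_cents + bat_cents == TOTAL_CENTS

# ball + (ball + 100) = 110  =>  2 * ball = 10  =>  ball = 5
ball_cents = (TOTAL_CENTS - DIFFERENCE_CENTS) // 2

print(ball_cents)               # prints 5, i.e. $0.05
print(satisfies_problem(5))     # prints True
print(satisfies_problem(10))    # prints False: 10 + 110 = 120 cents, not 110
```

The intuitive answer fails because a $0.10 ball implies a $1.10 bat, which brings the total to $1.20.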

Finally, the latest generation (ChatGPT and GPT-4) performed better than humans.

Our work thus identified a positive trajectory in LLM performance, which could be thought of as a "developmental" or "evolutionary" trajectory in which an individual or species acquires more and more skills over time.

Models that can improve

We wondered whether it was possible to improve the performance of models showing "intermediate" performance (those that answered questions but exhibited cognitive biases). We "encouraged" them to approach the problem that confused them in a more analytical way, which resulted in improved performance.

The simplest way to enhance the models' performance is to ask them to take a step back by instructing them to "think step-by-step" before posing the question. Another highly effective method is to show them an example of a correctly solved problem, which induces a form of rapid learning (known as "one-shot" learning).
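The two prompting strategies can be sketched as simple text templates. The exact wording used in the study is not given here, so the phrasing, the `Question:`/`Answer:` layout, the function names, and the worked pen-and-notebook example below are all assumptions made for illustration.

```python
def step_by_step_prompt(question):
    """Put a 'think step-by-step' instruction before the question."""
    return "Let's think step-by-step.\n" + question

def one_shot_prompt(question, solved_question, solved_answer):
    """Show one correctly solved problem, then ask the new question."""
    return (
        f"Question: {solved_question}\nAnswer: {solved_answer}\n\n"
        f"Question: {question}\nAnswer:"
    )

target = (
    "A bat and a ball cost $1.10 in total. The bat costs $1.00 more "
    "than the ball. How much does the ball cost?"
)
# Hypothetical worked example with the same structure as the target.
example_q = (
    "A pen and a notebook cost $2.20 in total. The notebook costs $2.00 "
    "more than the pen. How much does the pen cost?"
)
example_a = "The pen costs $0.10, because $0.10 + $2.10 = $2.20."

print(step_by_step_prompt(target))
print(one_shot_prompt(target, example_q, example_a))
```

Either string would then be sent to the model in place of the bare question.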

These results once again indicate that these models' performance is not fixed but plastic; within the same model, seemingly neutral contextual modifications can alter performance, much like in humans, where framing and context effects (the tendency to be influenced by how information is presented) are very common.

Evolution of model performance compared to that of humans (dotted lines).

S. Palminteri, Author provided (no reuse)

Conversely, we also found that the behaviors of LLMs differ from those of humans in several ways. Among the dozen models tested, we struggled to find one whose rate of correct answers approximated that of humans on the same questions. In our experiments, the AI models' results were either worse or better. Additionally, upon closer examination of the questions asked, those most challenging for humans were not necessarily the most difficult for the models.

These observations suggest that, contrary to what some authors have proposed, we cannot substitute LLMs for human subjects to understand human psychology.

Finally, we observed a relatively concerning fact from the perspective of scientific reproducibility. We tested ChatGPT and GPT-4 a few months apart and found that their performance had changed, and not necessarily for the better.

This is consistent with OpenAI slightly modifying its models without necessarily informing the scientific community. Work with proprietary models is exposed to such uncertainties. For this reason, we believe that the future of LLM research (cognitive or otherwise) should be based on open and transparent models to ensure greater control.